LLMs Vs. Geolocation: GPT-5 Performs Worse Than Other AI Models

In June, Bellingcat ran 500 geolocation tests, comparing LLMs from various companies against each other, as well as Google Lens – a staple tool for finding the location of photos.

At the time, ChatGPT o4-mini-high emerged as the clear winner, with Google Lens outperforming most other models. Just two months later, with new versions of these AI tools available, we re-ran the trial – this time adding Google “AI Mode,” GPT-5, GPT-5 Thinking, and Grok 4 to the mix.

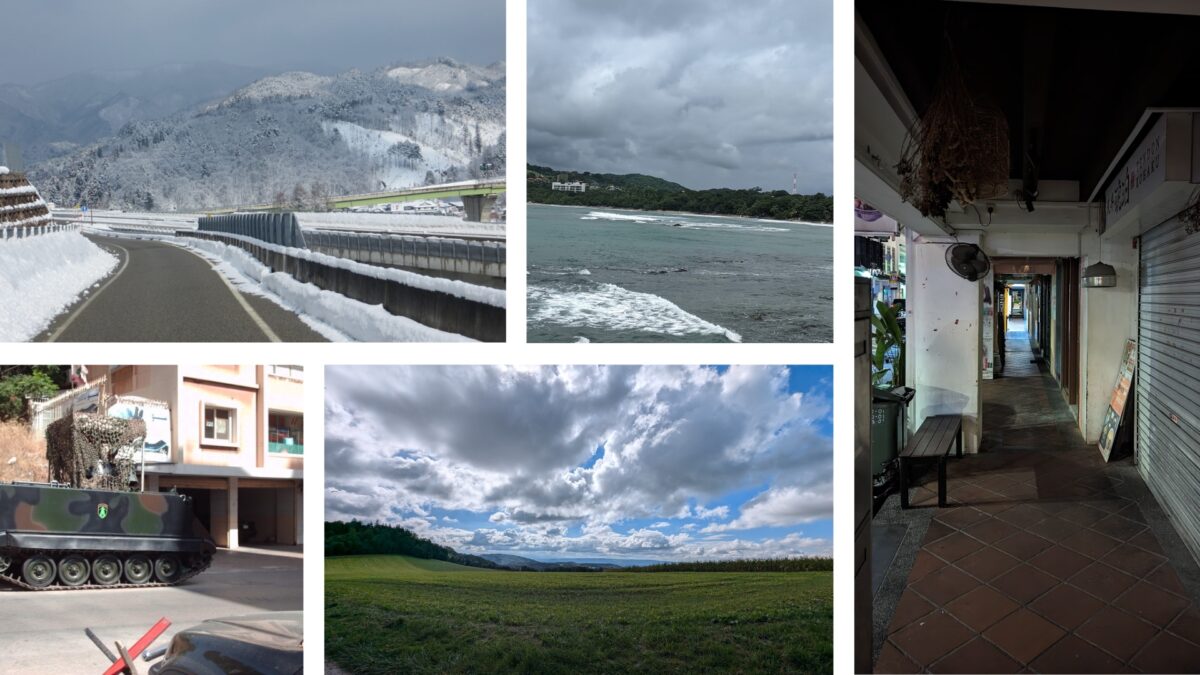

The original test used 25 of Bellingcat’s own holiday photos. From cities to remote countryside, the images included scenes both with and without recognisable features – such as roads, signage, mountains, or architecture. Images were sourced from every continent.

For the updated trial, five test photos were excluded because they had appeared in a previous article, which could have compromised the integrity of the results.

Responses from all 24 models were scored on a scale from 0 to 10, with 10 indicating an accurate and specific identification (such as a neighbourhood, trail, or landmark) and 0 indicating no attempt to identify the location at all.
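The article compares models "on average" but does not spell out how per-image scores were aggregated. As a minimal sketch, assuming a simple mean of each model's 0-to-10 scores, the comparison might be computed as below. The function name, model names and score values are hypothetical placeholders for illustration, not Bellingcat's data or method.

```python
from statistics import mean


def average_scores(scores_by_model):
    """Return (model, mean score) pairs sorted from best to worst.

    scores_by_model maps a model name to a list of per-image scores,
    each on the 0-10 scale described above.
    """
    for model, scores in scores_by_model.items():
        if not scores:
            raise ValueError(f"{model}: no scores given")
        if any(not 0 <= s <= 10 for s in scores):
            raise ValueError(f"{model}: scores must be between 0 and 10")
    averages = {model: mean(scores) for model, scores in scores_by_model.items()}
    return sorted(averages.items(), key=lambda pair: pair[1], reverse=True)


# Placeholder values only; these are NOT results from the trial.
example = {
    "model_a": [10, 7, 0, 4],
    "model_b": [8, 8, 2, 6],
}
ranking = average_scores(example)
for model, avg in ranking:
    print(f"{model}: {avg:.2f}")
```

A plain mean hides the spread of answers: in the placeholder data, one model can give both the single best and the single worst answer and still rank lower on average.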

Google AI Mode was shown to be the most capable geolocation tool overall.

Grok 4 gave both better and worse answers compared to Grok 3 but, on average, scored marginally higher. However, it was still less accurate than older versions of Gemini and GPT.

GPT-5, even in ‘Thinking’ and ‘Pro’ modes, was a considerable downgrade when compared with the capabilities demonstrated by GPT o4-mini-high. In one example, of a city street with skyscrapers in the background, o4-mini-high correctly identified the street, while GPT-5 in Thinking mode pointed to the wrong country.

Despite delivering faster answers, GPT-5 appeared to sacrifice accuracy. A surprising number of errors and a general sense of disappointment in the new model have also been reported by other users.

Bellingcat tested GPT-5 and its ‘Thinking’ mode via the Plus subscription, which costs roughly the same as access to o4-mini-high prior to its retirement. Five of the most difficult test images were also run through GPT-5 Pro. But even Pro, with a premium price tag of €200 per month, failed to geolocate the photos any more accurately than GPT o4-mini-high.

A Beach, a Hotel and a Ferris Wheel

The disparity between Google and the GPT models became even more apparent in Test 25 – a photo of a shoreline hotel in Noordwijk, the Netherlands, with a Ferris wheel rising just beyond the dunes.

In the previous trial, most older models – including those from GPT, Claude, Gemini and Grok – accurately identified the country as the Netherlands but failed to locate the town. Many latched onto the Ferris wheel but pointed instead to the seaside town of Scheveningen, which also has a Ferris wheel, though situated on a pier, not among the sand dunes.

However, the most recent models, GPT-5 Pro and Thinking, were even less accurate, identifying a beach in France – an entirely different country.

Unfortunately for open source researchers, following the release of GPT-5, OpenAI removed the option to select older models such as o4-mini-high. After a wave of negative feedback, OpenAI reinstated GPT-4o as the default model for paid subscribers. However, the most capable geolocation models identified in Bellingcat’s testing remain inaccessible.

Google AI Mode, on the other hand, was the first, and so far the only, model to correctly identify Noordwijk as the location in Test 25.

Though AI Mode is powered by a version of Gemini 2.5, it outperformed Gemini 2.5 Pro Deep Research in these tests. Described by Google as its “most powerful AI search, with more advanced reasoning and multimodality,” AI Mode geolocated test images with greater accuracy than any GPT models, including our previous winner, o4-mini-high.

AI Mode is currently only available in India, the United Kingdom and the United States.

The majority of models, at some point, returned a hallucination. Users should not rely solely on the answers provided by LLMs. Even the best options, including Google AI Mode, still, at times, confidently point to the wrong location.

The difference in models’ capabilities compared with just two months ago shows how quickly this field is evolving. However, OpenAI’s recent changes also suggest that progress is not guaranteed, and that AI’s ability to geolocate may plateau or even worsen over time. As new models emerge, Bellingcat will continue to test them.

Thanks to Nathan Patin for contributing to the original benchmark tests.
