The Open Source Tool That Has Preserved 150,000 Pieces of Online Evidence

Bellingcat’s Auto Archiver is a tool aimed at preserving online digital content before it can be modified, deleted or taken down. Publicly launched in 2022, it has preserved over 150,000 web pages and social media posts to date. The Auto Archiver has been used by Bellingcat’s journalists to preserve information on dozens of fast moving events such as the Jan. 6 riots – when we first used the tool internally – as well as gather digital evidence for our Justice and Accountability project and to monitor Civilian Harm in Ukraine.

The Auto Archiver has also been adopted by both large newsrooms and NGOs. It has been used by individual researchers, journalists, activists, archivists, academics and developers as well. With interest in the tool strong, we have worked hard to add to and improve it over time. But we have used the past few months to take a step back and to build a new and more robust ecosystem to further help individual organisations and researchers use and benefit from it.

Our aim has been to make it more reliable and even easier to use for more people. Today, we are happy to announce an updated version of the Auto Archiver which includes many new features like:

- Detailed documentation for all features and configurations

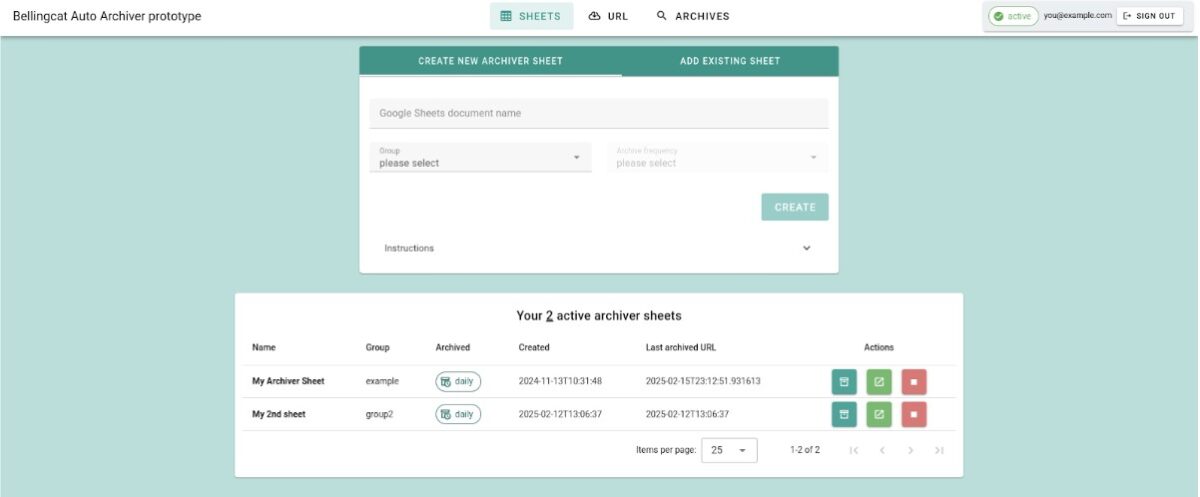

- A user-friendly interface designed for teams using a shared instance

- A new modular structure that improves the startup speed and reliability of the tool

- New features like chain of custody, perceptual hashing for deduplication, and techniques to avoid anti-bot measures and captchas on websites

- A user-friendly tool to configure the Auto Archiver, without the need to edit configuration text files

For an in-depth look at the changes made in this stable version of the Auto Archiver, see the What Changed, What Remains section further down in this article.

Automated Archiving and Collaboration – When to Use This Tool?

The latest version of the Auto Archiver has an easy-to-use web interface and a simplified installation process that makes it more straightforward to set up than before. However, some technical skills are still required for this initial process, and there are other tools available that could meet many of your archiving needs.

Support Bellingcat

Your donations directly contribute to our ability to publish groundbreaking investigations and uncover wrongdoing around the world.

If all you need is to archive a few unauthenticated URLs, we recommend using the Wayback Machine or Archive.today. Alternatively, WebRecorder’s browser extension ArchiveWebPage can create a replayable archive of a website you visit – even for content behind login walls. For batch processing, the Wayback Machine has a bulk upload service that accepts Google Sheets. If you individually need to record all your browser interactions and store content along the way there are paid options like Hunchly. Finally, if all you are interested in are videos and are comfortable with the command line, yt-dlp will probably be enough to download those, even in bulk.

But if you’re hoping to automate your archiving, or archive a large number of URLs in a collaborative environment, then this is where the Auto Archiver really shines. Its modular framework allows you or your team to customise archiving based on your needs, and provides a way to generate metadata that ensures others can trust that your archived content has not been tampered with.

Learn more about what sites the Auto Archiver can archive here.

The Future of Web Archiving

Archiving the web is hard, especially when logins, captchas, and other bot prevention systems are in place. We will do our best to keep improving our Auto Archiver, but we note that it should be just one of many tools in your researcher’s toolkit. You can explore a variety of other useful tools in the Bellingcat Open Source Investigation Toolkit.

Still, if you want to support us on this journey of archiving critical information, you can:

- Download and use this tool

- Donate directly to Bellingcat

- Test, give feedback, and develop new features in our GitHub

For newsrooms:

If you work in a newsroom or research team and want to access a demo or help to deploy the Auto Archiver internally you can reach us at contact-tech@bellingcat.com with the Subject “Auto Archiver at [my team/organisation]” and tell us more about your organisation and archiving needs. Building a greater adoption base is the best way to ensure the future of this tool and its versatility.

What Changed, What Remains

Subscribe to the Bellingcat newsletter

Subscribe to our newsletter for first access to our published content and events that our staff and contributors are involved with, including interviews and training workshops.

Now that we have given a broad overview of the tool and its changes, what follows is a deeper look at how different parts of it work and interact. This will likely be of greater benefit for more technical users, and we again stress that successful users of the tool will likely need some technical knowledge to set it up for the first time.

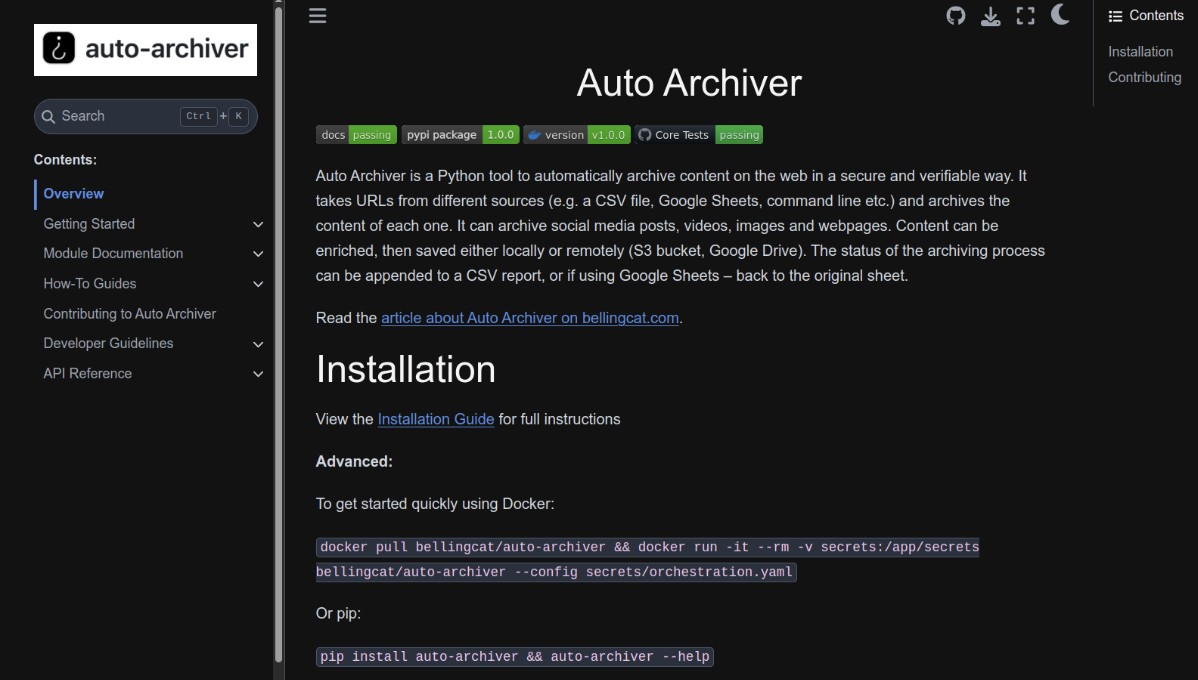

But help is available with our live Auto Archiver Documentation. This is where you will always find the latest information on how to install, configure or debug the tool. Even if some aspects mentioned in this article change in the coming years, the documentation will be your go-to space for the up to date instructions.

If you have questions or problems please open an issue on GitHub. That’s where others will also be going to for help and constitutes our shared knowledge space.

A New Architecture

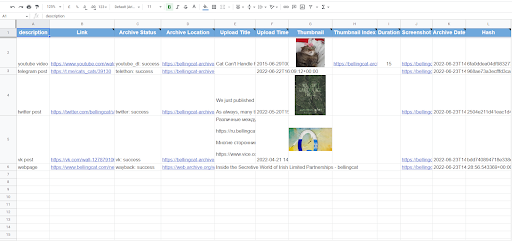

Many open source researchers, including at Bellingcat, favour using the Auto Archiver with the Google Sheets integration, which allows users to work collaboratively by adding links to a spreadsheet and letting the Auto Archiver run in the background. However, we have now made it simpler to integrate the Auto Archiver into other systems. One such example is ATLOS, a collaborative investigations platform that integrated the Auto Archiver and which has been used by Bellingcat and the Centre for Information Resilience.

Integration is possible via the new modular architecture of the Auto Archiver and can be seen in the two new projects that we recently made public under open source code licenses: the Auto Archiver API and the Auto Archiver Web Interface.

Modules are the building blocks of the archiving pipeline and tell the tool how to run. They detail where to find the URLs, which archiving techniques to use, what additional processing to carry out on archived content and where and how to store it. Each module falls into a specific class:

- Feeder modules specify where to read the URLs from. There’s one for Google Sheets, for example.

- Extractor modules download media and other metadata from a URL: our most versatile one is the Generic Extractor, which uses yt-dlp to download videos. However, extractors can be tailor made for specific platforms like the Telethon Extractor, which requires a Telegram account to download all media and metadata from the messages in public or private chats an account has joined.

- Enricher modules increase the value of the archived content with additional information or checks, such as hashing or timestamping the content for future consistency or chain of custody validations.

- Formatter modules collect and display the result of the process in a single formatted output. We use the HTML Formatter, as shown in this Bluesky post example.

- Storage modules tell the tool where to put the files it downloaded or generated. The easiest is to store it locally. But to ensure better preservation the best practice is to use cloud storages like S3 or Google Drive.

- Database modules simply indicate where to save a record of this archive, such as whether archival was successful and which methods were used. You can use a CSV file and Google Sheets, for example.

The modules documentation can be found here and it is there to help you understand how each module works and is configured. Configuring which modules to use is done via a YAML file. If you are not comfortable with those, we have you covered with a new interface called the configuration editor where you can visually create or edit your modules configuration. In fact, the first time you run the Auto Archiver a minimal working YAML configuration file is generated which you can use straight away to read URLs from the command line and store archived content locally.

Some platforms rate-limit or outright block IPs based on inauthentic behaviour. One of the strategies we employ to circumvent that is sending traffic through a proxy, which you can configure in specific modules like the Generic Extractor . We have been using Oxylab’s Residential Proxies as part of their Project 4beta successfully for over a year, but know that there are several good providers out there.

If you are a developer, you can design new modules as needed using Python code, and we welcome it if you want to contribute those back to our code. Imagine a Feeder that is constantly scraping URLs from a Bluesky account, or an Enricher that uses an AI model to detect and blur graphic content. All of that is possible and easy to build in this new architecture.

We hope you will enjoy the updated tool.

Please give us any feedback or suggestions for improvements by contacting us via contact-tech@bellingcat.com.

Bellingcat is a non-profit and the ability to carry out our work is dependent on the kind support of individual donors. If you would like to support our work, you can do so here. You can also subscribe to our Patreon channel here. Subscribe to our Newsletter and follow us on Bluesky here and Instagram here.