India-Pakistan Conflict: How a Deepfake Video Made it Mainstream

India and Pakistan have been trading blows in the wake of a militant attack on tourists in Indian-administered Kashmir last month.

On May 7, India said it had launched missile strikes in Pakistan and Pakistan-administered Kashmir. Pakistan – which denies any involvement in the April attack on the tourists, most of whom were Indian – then claimed to have shot down Indian drones and jets.

Claims and counterclaims of ongoing strikes and attacks have been forthcoming from both sides. Some have been difficult to immediately and independently verify, creating a vacuum that has enabled the spread of disinformation.

For example, on May 8, a deepfake video of US President Donald Trump appearing to state that he would “destroy Pakistan” was quickly debunked by Indian fact-checkers. Its impact was therefore minimal.

However, the same cannot be said of another deepfake video spotted by Bellingcat and, by the time of publication, by at least one Pakistani outlet.

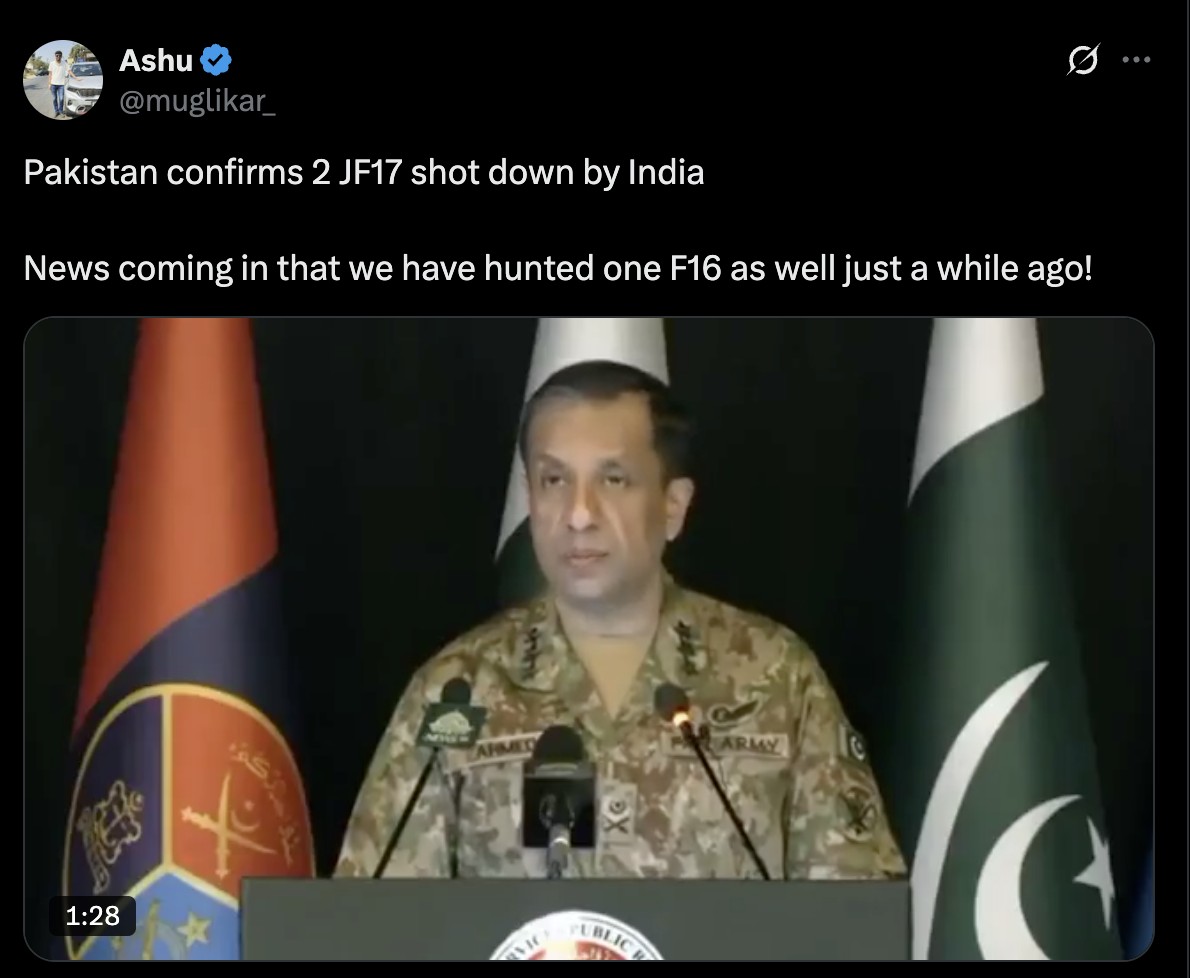

The altered video, which had been shared on X (formerly Twitter) nearly 700,000 times at the time of publication, purports to show a general in the Pakistani army, Ahmed Sharif Chaudhry, saying that Pakistan had lost two of its aircraft.

A Community Note was later added to the video on X describing it as an “AI generated deepfake”.

However, several Indian media companies had already picked up and run with the story, including large outlets like NDTV. Other established news media that featured quotes from the altered footage in their coverage include The Free Press Journal, The Statesman and Firstpost.

Bellingcat was able to debunk the video by finding another clip of the same press conference from last year. The clip confirms that different audio was added over the original footage, with Chaudhry’s lips appearing to sync with the altered audio.

The position of the microphones, Chaudhry’s position in relation to the flags, and his movements are identical in both videos. Both also cut to the audience, which is the same in each.

You can see the video published on Facebook in 2024 here and the manipulated video published on X here.

Mohammed Zubair, co-founder of Indian fact-checking organisation Alt News, told Bellingcat that mis- and disinformation are commonly found on Indian social media. But while it may be easy enough for trained fact-checkers to debunk a deepfake where an old video is recycled and the audio manipulated, Zubair was concerned that the general public may just hit the share button because of the video’s emotional appeal. “It is actually very worrisome because it looks very convincing,” he said.

Bellingcat contacted NDTV, The Free Press Journal, The Statesman and Firstpost about the details of this story but did not receive a response before publication.

NDTV and The Statesman later deleted their reports without clarification. Yet experts say videos like these serve as a warning of the continued and evolving dangers of disinformation.

Rachel Moran, a senior research scientist at the University of Washington’s Center for an Informed Public, told Bellingcat that the speed with which such videos can be created and posted brings a new challenge.

“In crisis periods, the information environment is already muddied as we try to distinguish rumours from facts at speed,” Moran said. “The fact that we now have high-quality fake videos in the mix only makes this process more taxing, less certain and can distract us from important true information.”

Correction: This article was amended on May 9 to clarify that the Facebook video of Chaudhry was published in 2024 and not 2025.
